A Visual Introduction to the Past, Present, and Future of Light Field Technology

Today’s Content Creation Methods

Developers use three primary methods to create mobile or VR/AR/MR applications. Each approach has unique benefits and tradeoffs, often based on its final display device or specific application requirements.

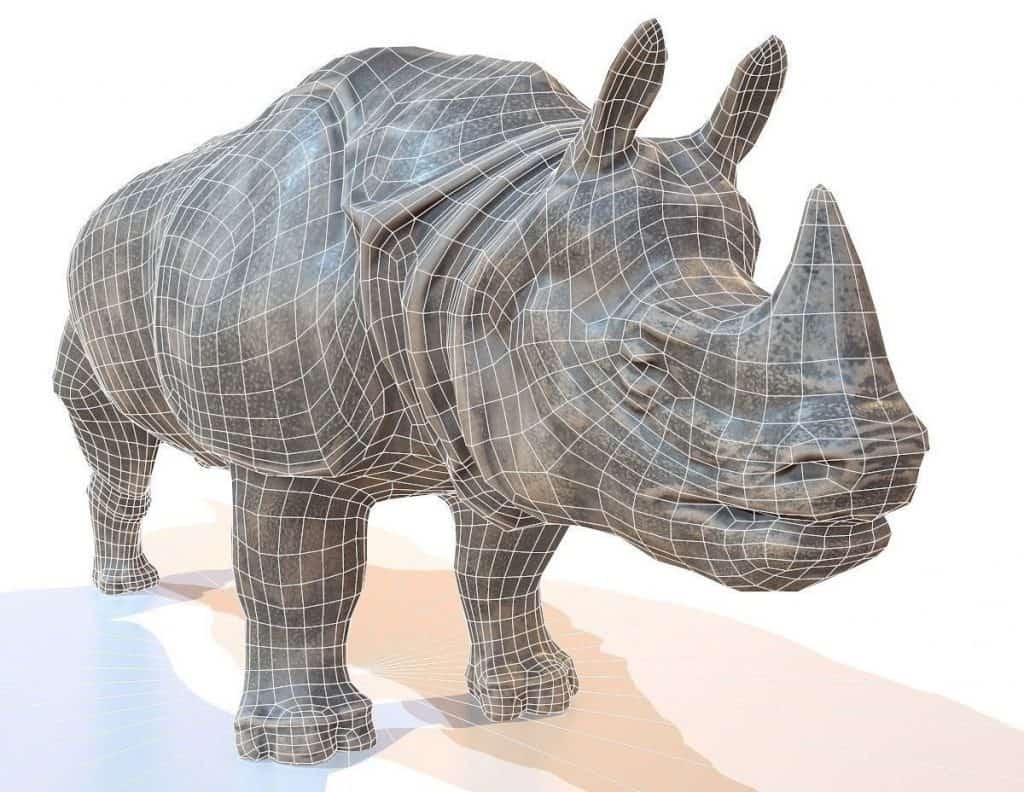

3D Models – Craft a representation of an object out of polygons.

Photogrammetry – Take many photos of an object and use software to generate a 3D model from this information.

Video – Film an object.

If I’m building a First Person Shooter I’d likely create the whole game as complex arrangements of 3D models. If I wanted to give hyper realistic representations of real-world places I may choose to utilize photogrammetry. And if I’m working on a 360 documentary I’d likely use video.

Many apps use a seamless combination of these techniques together to play to their unique strengths.

What Are Light Fields?

Think of a light field like a magic window into another world.

This window allows a user to look within from any perspective and see the content on the other side change. A user can get closer to the window or move far to one side and see different information, just like real life. If the user is very close to the window they can be completely immersed in this other reality. When they reach the edge of the window they won’t see any more content. And the creator of a light field gets to decide how large or small that window is, based on their specific uses.

A light field volume holds images taken from every viewable position within a defined space. When your head moves, the correct images are swapped in real time. If you’re in VR, like the example above, separate images are swapped in for each eye to give the illusion of depth.
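The image swapping described above can be sketched as a lookup into a grid of captured views. This is a minimal illustration, not a production renderer: the `ViewGrid` class, the flat rectangular window, the nearest-view rounding, and the 64 mm eye separation are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ViewGrid:
    """Hypothetical capture window: cols x rows views spread over width x height metres."""
    cols: int
    rows: int
    width_m: float
    height_m: float

    def nearest_view(self, x_m: float, y_m: float) -> Tuple[int, int]:
        """Return the (col, row) of the captured image closest to a position.

        Positions are measured from the window centre. Anything outside the
        window is clamped to the edge, because no content was captured there.
        """
        u = (x_m / self.width_m + 0.5) * (self.cols - 1)
        v = (y_m / self.height_m + 0.5) * (self.rows - 1)
        col = min(max(round(u), 0), self.cols - 1)
        row = min(max(round(v), 0), self.rows - 1)
        return col, row


def stereo_views(grid: ViewGrid, head_x_m: float, head_y_m: float, ipd_m: float = 0.064):
    """Pick one view per eye by offsetting the head position half the eye separation."""
    half = ipd_m / 2
    left = grid.nearest_view(head_x_m - half, head_y_m)
    right = grid.nearest_view(head_x_m + half, head_y_m)
    return left, right


# A 17 x 17 grid of views over a 32 cm square window (views 2 cm apart).
grid = ViewGrid(cols=17, rows=17, width_m=0.32, height_m=0.32)
print(stereo_views(grid, 0.0, 0.0))  # a different view index for each eye
```

A real player would likely blend between neighbouring views rather than snap to the nearest one, but the core idea is the same: the tracked head position is only used to choose which stored pixels to show.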

Why Do Light Fields Matter?

Light field technology is exciting because it adds a fourth content display option to our list. Light fields are a very old idea that just hasn’t been viable until recently.

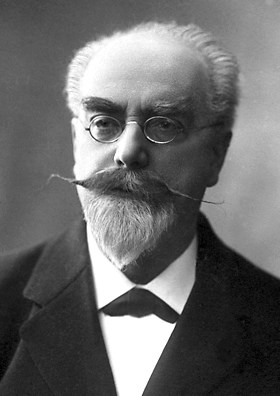

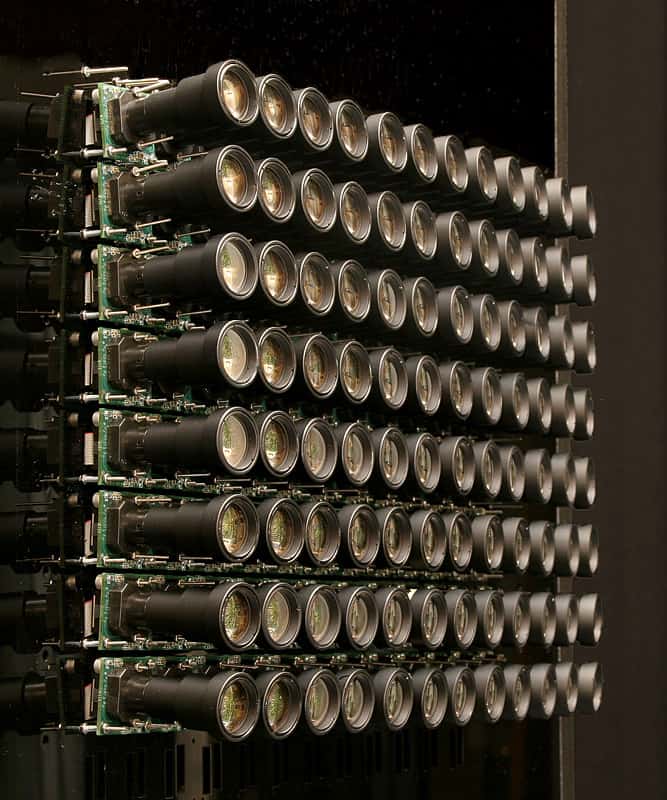

The idea dates way back to 1908, when Gabriel Lippmann proposed ‘Photographie intégrale’, also known as Integral Imaging. (Lippmann won the Nobel Prize in Physics that same year, though it was awarded for his method of color photography.) His idea was a lens made up of many tiny lenses, each capturing a slightly different view. Lippmann’s original concept, now called a bug eye lens or micro lens array, only had 12 lenses.

Yet, despite his Nobel Prize, Lippmann never actually built a functioning integral camera! And while his idea was revolutionary, the technique remained an impossibly fringe concept for decades. Capturing this many images at the same moment in time, in high quality, was just beyond the emerging technology of early photography. The closest commercial use was the stereoscope, which, while insanely popular, only showed a fixed stereo image.

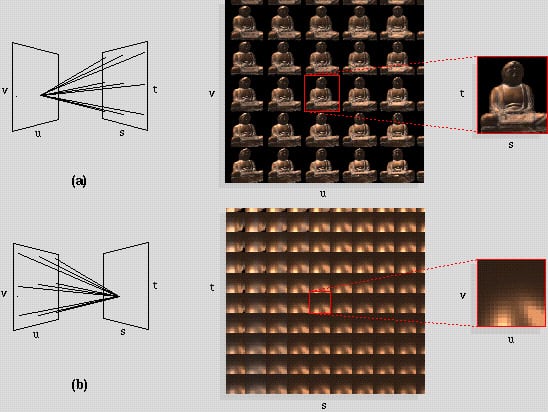

Video from the pioneering 1996 SIGGRAPH Paper ‘Light Field Rendering’ by Marc Levoy and Pat Hanrahan

In the 1990s there was a rebirth in exploring light fields. The dawn of personal computers and the creation of practical computer graphics allowed the first real captures to find life. Innovation hubs like SIGGRAPH gave these once niche ideas a forum. Yet, just as decades before, the tech was ahead of its time.

What Are The Benefits of Light Fields?

This content creation approach has the benefits of video: it is a faithful representation of what was captured, it is high resolution, and it is instant to capture. On top of that, we can see in stereo and move within the content, to a predefined degree.

Real-world content can be captured by a micro lens array, which is a fancy way of saying a giant camera with a bunch of lenses. It can also be captured with a single lens, or several lenses, moved through space very quickly, as seen above.

Or content can be computer graphics (CGI). While these could be rendered as 3D objects, there are limitations to the computational power of modern devices. Light fields allow the highest fidelity of models, textures, lighting, and reflections which would not be possible in real time.

It is important to note that a light field is really an image floating in space that swaps in the right image for the user’s specific location. This means that if we can swap in the right image quickly enough, very complex visuals can appear with almost no CPU or GPU cost. These light field captures are also technically holographic: they contain all possible views within a preset range you define. How big is the window? How deep is the window? Do you restrict X or Y movement? It is all in the creator’s control.

An example of light field parallax from ‘Light Field Video Capture Using a Learning-Based Hybrid Imaging System’ by Ting-Chun Wang, Jun-Yan Zhu, Nima Khademi Kalantari, Alexei A. Efros, and Ravi Ramamoorthi (2017)

Light field captures have parallax: as your head moves, nearby objects shift across your view more than distant ones, and objects overlap each other differently depending on their depth. This increases immersion and presence, and isn’t available in 360 videos. We’re also able to animate between light fields or capture light field sequences, just like film.
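To get a feel for why parallax conveys depth, here is a rough sketch using a simple pinhole camera model, where the on-screen shift of an object is proportional to head movement and inversely proportional to the object’s distance. The function name and the focal length of 1,000 pixels are assumptions chosen for illustration.

```python
def parallax_shift_px(head_move_m: float, depth_m: float, focal_px: float = 1000.0) -> float:
    """Approximate sideways shift (in pixels) of an object when the viewer moves sideways.

    Pinhole model: shift = focal length (in pixels) * movement / depth.
    """
    return focal_px * head_move_m / depth_m


# Move your head 5 cm to the side:
near = parallax_shift_px(0.05, depth_m=0.5)  # object half a metre away
far = parallax_shift_px(0.05, depth_m=5.0)   # object five metres away
print(near, far)  # the near object shifts about ten times further than the far one
```

A 360 video shows the same pixels no matter how the head moves, so this depth cue is missing entirely.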

An example of animated light fields from ‘Real-time Rendering with Compressed Animated Light Fields’ by Disney Research. Authored by Charalampos Koniaris, Maggie Kosek, David Sinclair, and Kenny Mitchell (2017)

Captures can have higher quality reflections, complex lighting, or realistic physics which wouldn’t be possible in real-time 3D graphics. This is possible because we’re just dealing with playing back previously captured pixel data.

An example of adjusting camera focus from ‘Light Field Video Capture Using a Learning-Based Hybrid Imaging System’ by Ting-Chun Wang, Jun-Yan Zhu, Nima Khademi Kalantari, Alexei A. Efros, and Ravi Ramamoorthi (2017)

A light field’s focus or aperture can also be adjusted on the fly based on depth, whereas a video needs to be in focus at the time of filming. This is possible because depth can be estimated from the light field data acquired.
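One common way to refocus after capture is “shift-and-add”: slide each view sideways in proportion to its camera position, then average. Objects at the depth matching the chosen shift line up and stay sharp, while everything else smears out. The sketch below is not the method used in the paper above; it is a deliberately tiny version that works on single scanlines from a row of cameras, and the `refocus` and `make_views` helpers and the synthetic one-pixel “object” are all assumptions for illustration.

```python
def refocus(views, slope):
    """Shift-and-add refocus over a row of 1D views.

    views[k] is the scanline seen by camera k. slope is the pixel shift per
    camera step that brings the chosen depth into alignment.
    """
    n = len(views)
    width = len(views[0])
    centre = (n - 1) / 2
    out = [0.0] * width
    for k, view in enumerate(views):
        shift = round(slope * (k - centre))
        for x in range(width):
            src = x + shift
            if 0 <= src < width:
                out[x] += view[src] / n
    return out


def make_views(num_cameras=5, width=21, disparity=2):
    """Synthetic scene: one bright point that moves `disparity` pixels per camera."""
    centre = (num_cameras - 1) // 2
    views = []
    for k in range(num_cameras):
        view = [0.0] * width
        view[width // 2 + disparity * (k - centre)] = 1.0
        views.append(view)
    return views


views = make_views()
in_focus = refocus(views, slope=2)      # matches the point's disparity: sharp peak
out_of_focus = refocus(views, slope=0)  # wrong depth: energy spread over 5 pixels
print(max(in_focus), max(out_of_focus))
```

Changing `slope` is the equivalent of turning the focus ring, except it happens at playback time instead of on set.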

An example of light field interaction by Mark Bolas, Director for Mixed Reality Research at USC Institute for Creative Technologies and Associate Professor, USC School of Cinematic Arts (2016)

Just like 3D models, multiple light field captures can be combined and manipulated. This interactivity is yet another massive improvement over static video. Manipulate size or position, change light sources, or swap out light field captures completely. The possibilities are endless for creators. Or you can mix light fields with 3D models, film, or photogrammetry to increase the illusion of reality.

But light fields have another advantage, and that is that most of the data between slight variations isn’t unique. And so, most of the data isn’t needed and can be removed. What was 1,000 high quality photos of small movements can be compressed to be a fraction of this size by removing this redundant visual info.
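The redundancy argument can be sketched with a toy delta encoder: keep the first view in full, then store only the pixels that differ from the previous view. This is an idealized illustration, not an actual light field codec. The `delta_encode`, `delta_decode`, and `make_views` helpers are hypothetical, and the synthetic scene (a static background with one small moving object) is far friendlier than real footage, where parallax changes many pixels at once.

```python
def delta_encode(views):
    """Keep the first view whole; for each later view keep only (index, value)
    pairs for pixels that differ from the previous view."""
    first = list(views[0])
    deltas = []
    for prev, cur in zip(views, views[1:]):
        deltas.append([(i, c) for i, (p, c) in enumerate(zip(prev, cur)) if p != c])
    return first, deltas


def delta_decode(first, deltas):
    """Rebuild every view by replaying the stored changes in order."""
    views = [list(first)]
    for delta in deltas:
        nxt = list(views[-1])
        for i, value in delta:
            nxt[i] = value
        views.append(nxt)
    return views


def make_views(num_views=100, width=1000, object_width=10):
    """Synthetic scanlines: a fixed background with a bright object that moves
    one pixel per view."""
    background = [i % 256 for i in range(width)]
    views = []
    for k in range(num_views):
        view = list(background)
        view[k:k + object_width] = [255] * object_width
        views.append(view)
    return views


views = make_views()
first, deltas = delta_encode(views)
raw_values = sum(len(v) for v in views)
stored_values = len(first) + 2 * sum(len(d) for d in deltas)  # index + value per change
print(raw_values, stored_values)
assert delta_decode(first, deltas) == views  # nothing was lost
```

In this toy case the stored data is a small fraction of the raw data while still decoding to the exact original views.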

Finally, you may have noticed that we’ve mentioned that light fields are ideal for AR, VR, MR, and even mobile. Why? Each platform now allows you to understand where you are in the X, Y, and Z dimensions. Recent advancements like ARKit and ARCore bring new life to mobile apps, making light fields a viable mobile medium.

What are the Disadvantages of Using Light Field Technology?

The main reason light fields haven’t worked in the past is that they require a massive amount of high quality pixel-based information, whether that information is space on a film negative from a camera or bits on a hard drive from a virtual camera. The more movement allowed in a scene, the more individual captures are needed. Think of this as 100, or even 100,000, different high-res images of the exact same scene with slight variations. And while light fields can capture animated content, the file size multiplies with every frame, making it many times larger than a static capture. This has made both capture and mass delivery a challenge.
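A quick back-of-the-envelope calculation shows the scale of the problem. The numbers below are assumptions picked for illustration (1,000 views, 1920 x 1080 pixels each, 3 bytes per pixel, and one second of animation at 30 frames), not measurements from a real capture.

```python
def raw_size_bytes(num_views, width_px, height_px, bytes_per_pixel=3, frames=1):
    """Uncompressed size of a light field: every view of every frame stored in full."""
    return num_views * width_px * height_px * bytes_per_pixel * frames


static = raw_size_bytes(1_000, 1920, 1080)
animated = raw_size_bytes(1_000, 1920, 1080, frames=30)
print(f"{static / 1e9:.2f} GB")    # 6.22 GB
print(f"{animated / 1e9:.1f} GB")  # 186.6 GB
```

Even a static capture with these settings is larger than the memory of most phones before compression, and a single second of animation multiplies that by the frame count.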

There also hasn’t been a great way to track a viewer’s X, Y, or Z position with fidelity. It won’t feel like you’re “really there” if the proper image doesn’t appear with your movements. Modern mobile, AR, and VR devices have this tracking built-in.

Since the content captured will be restricted in X or Y, it is best to capture content where the edges aren’t visible. So, light fields are not useful for everything, only for very targeted use cases.

While compression of light fields is possible, it remains mainly theory today. There is no ‘JPEG’ format of light fields which compresses the content based on content creator control.

And finally, a technical note, these aren’t truly volumetric (aka 3D). They’re faking 3D. If we try to go past the window of our capture area the illusion disappears.

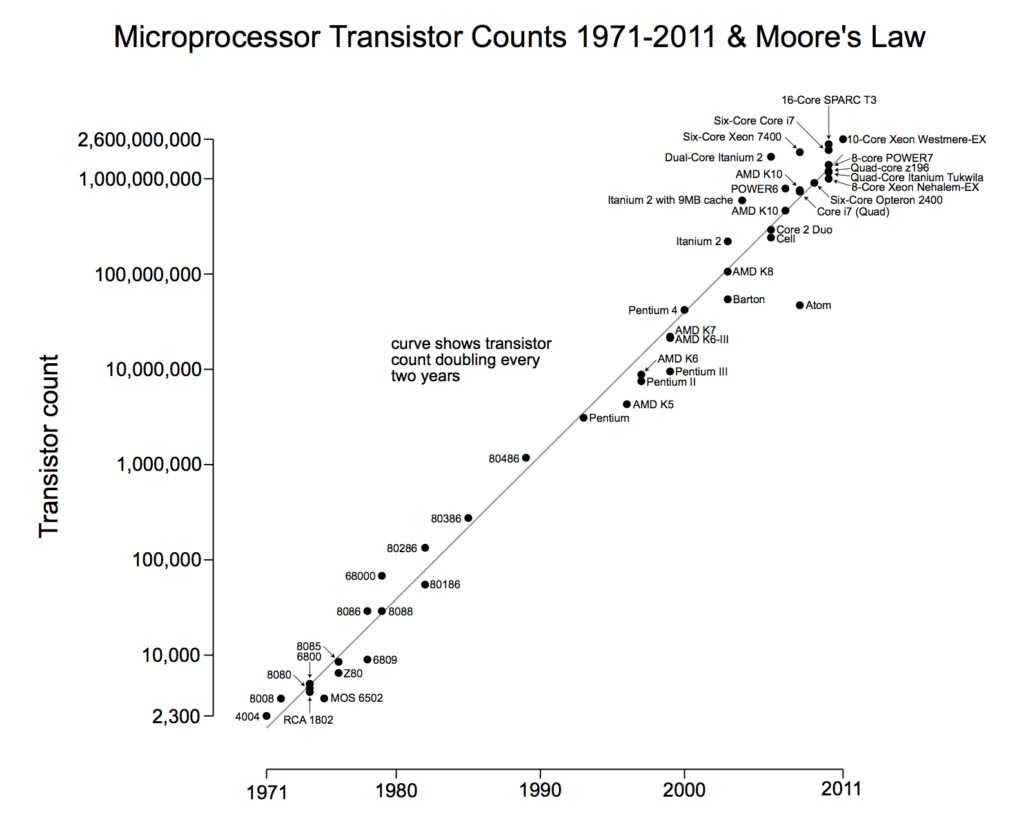

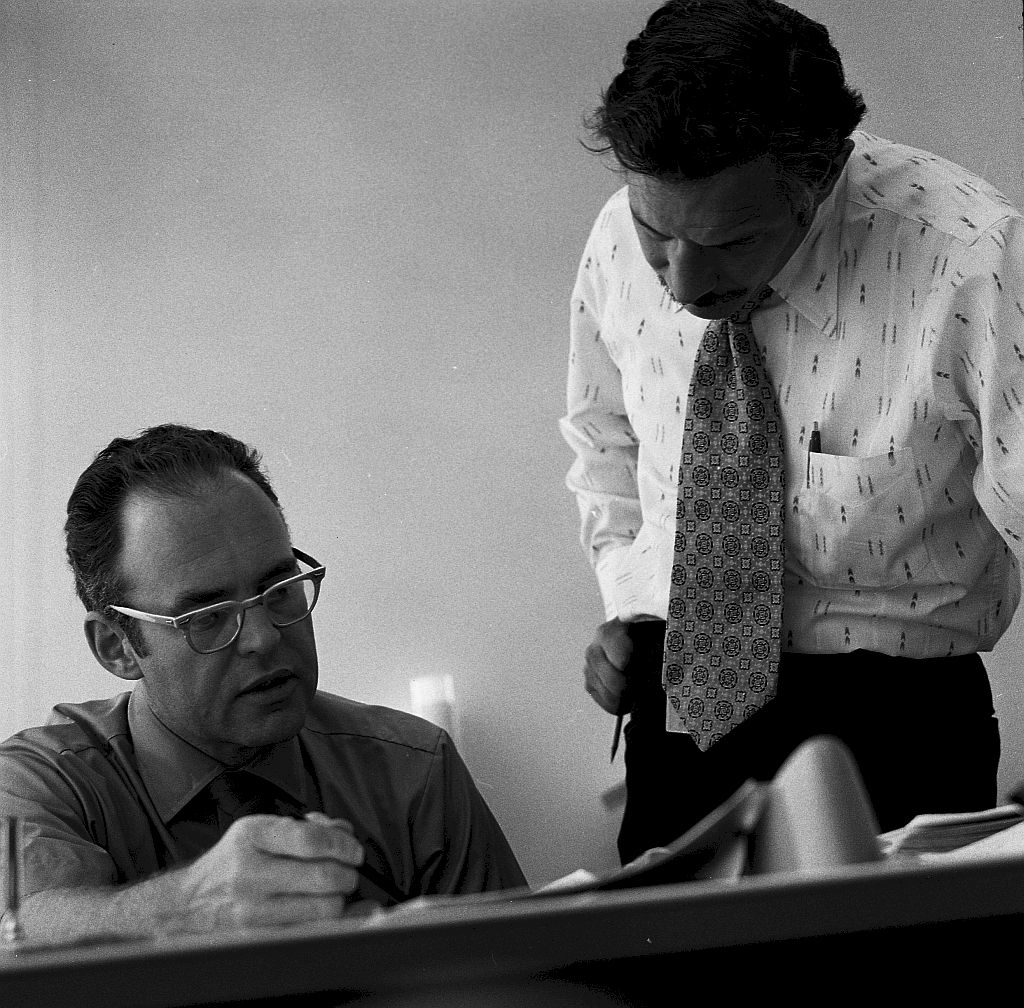

What is Moore’s Law and Why Are Light Fields Relevant Now?

Gordon Moore, co-founder of Fairchild Semiconductor and Intel, proposed in 1965 that the number of transistors on circuit chips would double every year for the next ten years (he later revised the pace to every two years). Over 50 years later, “Moore’s Law” is still holding strong, allowing the magic in our personal computers to improve at an exponential rate.

But Moore’s Law relates to more than the power of a computer. Other computer components saw the same exponential progress. Over the past decade we’ve watched as graphics chips became smaller. Batteries became smaller and lasted longer. Everything became lighter. Each of these has made new inventions like the mobile phone, tablet, and wireless VR/AR device a possibility.

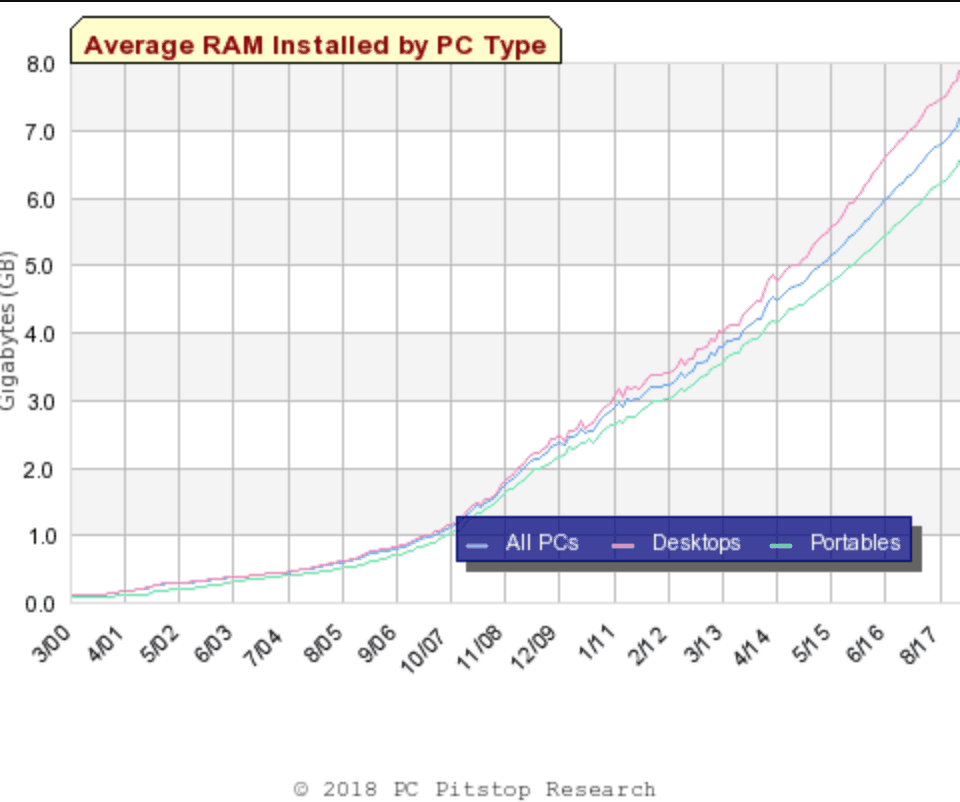

RAM, the ability for your computer to hold information ready for access, is one of the secret winners. And RAM is starting to allow new technological offerings we hadn’t dreamed of on mobile. Like Light Fields.

RAM on new mobile phones (like the Samsung Galaxy S9) has now increased to 4GB. To put this into perspective, that is equal to the average RAM on desktops only 4 years ago. Mobile devices and AR/VR hardware can now rely on both a speedy graphics pipeline and a large amount of application memory. This means light fields are more viable with each passing day.

How Can I Implement Light Fields?

Our team had the same question in 2016. We began exploring light field technology as a fresh technique to develop photorealistic content, captured instantly, that allowed user movement. We were so excited! Then we hit a wall.

There are no public codecs, players, embeds, or integrations, let alone a consistent way of capturing light field content.

Though light field technology had been on computer visionaries’ radar since the early 1990s, even the biggest players struggled to launch a tangible version to market. Lytro and OTOY have pioneered this market, but it seems kits and code are forever around the corner. Often light fields have been relegated to white papers, never finding their way into a commercial project.

There was no easy way to get started without starting from scratch. Bummer.

What If We Made Our Own Light Field Renderer?

Never ones to shy away from a challenge, we created our own Light Field renderer in Unity. Cubicle Ninjas launched the first commercial implementation called ‘Impossibly NYX’ in partnership with Samsung and NYX Professional Makeup in December 2017.

What came as a surprise was that the current disadvantages of light fields are solvable problems today. In fact, after a bit of work, we believe they have been solved.

In Part Two of our series on light fields we’ll talk about what we learned in building a light field renderer, how we’ve used it, how we’re capturing content, and (most importantly) why light fields are here to stay. Best of all, we’ll share what we think is the secret file format of light fields, which has a compression format built in, and is hidden right under our noses. Until next time!

References

We wouldn’t have fallen in love with this technology if it weren’t for the amazing and pioneering work below.

Light Field Rendering by Marc Levoy and Pat Hanrahan, SIGGRAPH ’96

Stanford’s Light Field Research

‘Light Field Imaging: The Future of VR-AR-MR’ by Mark Bolas, presented at the Visual Effects Society

‘What is a Light Field’ by Lytro

‘What is a Light Field’ by OTOY

‘Work in progress: light field rendering in VR’ by Joan Charmant

SIGGRAPH

Visual Effects Society

All copyrights and trademarks owned by their respective authors.

Nice Article! Would love to see links to the projects that you are using images from so your readers can dig deeper if they like.

Thanks Greg!

Good news: all of the images link out these projects, but you make a great point that we should call this out more clearly. We’ll update this very shortly and put an extra set of reference links at the bottom too. Thanks!

3/13 update – Links double-checked, captions added to all images sharing more detailed authorship info, and a full reference section included for further reading.

Great article that does a fantastic job of explaining light fields in simple and understandable terms. Thx.

When can we expect part two?

Thanks Ted!

It looks like part two will be arriving in early May. We’re building a series of light field demos across multiple verticals showcasing some of our realizations, as some of these concepts are hard to parse without seeing real world contextual scenarios. Excited to share these!

Good article!

I waited for a couple of years, and the SDKs from OTOY, Google, and others never arrived and have stopped being talked about.

Is part 2 ever coming? I’m really interested in this, particularly compression and capturing.